Just over a year ago, in the previous blog post, I wrote about how you could write a Kubernetes validating admission webhook using Python.

That was a really fun challenge, getting both Kubernetes and Python to work together.

Kubernetes makes it easy to use and write webhooks, so even if you’re not a Kubernetes expert, the platform itself abstracts away most things. You only need to focus on the objective and which “problem” the webhook needs to solve.

In this article, you will learn how to easily create a mutating webhook to intercept Kubernetes objects and modify them on the fly.

Once again, I will mainly focus on the actual implementation and code. If you want to read more about mutating webhooks you can start here.

The logic for this particular webhook is nothing new, and it’s even available natively in Kubernetes. That said, the goal isn’t to reinvent the wheel but to serve as project-based learning.

The webhook intercepts Deployment (or similar) objects and automatically applies a predetermined nodeSelector label to their pods.

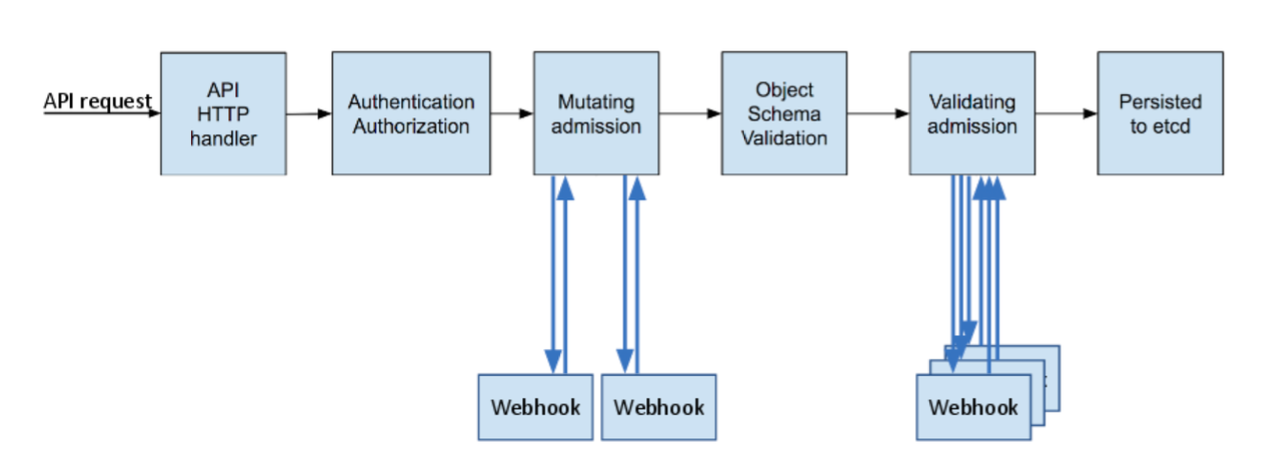

As its name implies, a mutating webhook doesn’t just allow or block a request. Instead, it modifies the object before it passes through the next part of the chain and gets persisted in the etcd database.

The webhook is written with Python 3.10, and instead of Flask this time I’ve used the FastAPI framework. The Kubernetes cluster version that this was tested on is 1.25.0, set up using Docker Desktop.

Webhook

The code and project files are available on my GitHub here.

The mutating webhook requires a tiny bit more logic in your code than the simpler validating webhook.

So let’s break down the steps required for this to work:

- Configure a mutating webhook config to intercept certain objects on ‘create’, and call the webhook to take action

- Inspect the request containing the payload

- Check if nodeSelector exists, if not, apply it using JSON patch

- Return a modified admission response with patched data that will contain the set nodeSelector

Or something along those lines…

As the most straightforward approach, I chose to supply the node selector label using an environment variable.
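As a rough sketch of that idea, the snippet below reads a `label: value` string from an environment variable and splits it into its two parts. The variable name `NODE_POOL` and the helper `read_node_pool` are assumptions made for illustration; the real name is in the project files.

```python
import os

# Hypothetical variable name; the article only says an environment variable is used.
ENV_VAR = "NODE_POOL"


def read_node_pool(env=os.environ) -> tuple[str, str]:
    """Return the (label, value) pair parsed from a 'label: value' string."""
    raw = env.get(ENV_VAR, "")
    # Split on the first colon only, so a value containing ':' stays intact.
    label, sep, value = raw.partition(":")
    label, value = label.strip(), value.strip()
    if not sep or not label or not value:
        raise ValueError(f"{ENV_VAR} must look like 'label: value', got {raw!r}")
    return label, value


# A plain dict stands in for the real environment in this demo.
print(read_node_pool({"NODE_POOL": "kubernetes.io/hostname: docker-desktop"}))
```

Failing fast on a malformed value is one possible design choice here; it surfaces a misconfigured Deployment at startup instead of on the first admission request.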

JSON Patch

Now, before I dive into the code let me explain how the actual patch operation works, which will be used to modify the request.

At the time of writing, Kubernetes supports only one patch type in admission responses: JSON Patch.

A JSON Patch document is just a JSON file containing an array of patch operations. The patch operations supported by JSON Patch are “add”, “remove”, “replace”, “move”, “copy” and “test”. The operations are applied in order: if any of them fail then the whole patch operation should abort.

Example array containing a patch operation:

    [{"op": "add", "path": "/spec/replicas", "value": 3}]

Depending on the object, this will patch and set the replicas value to 3.

Note: There can be multiple patch operations in the array. You are not limited to one.

You specify the operation, the field, and the value. Simple, right?
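To make the semantics concrete, here is a deliberately small sketch of what applying such a patch does to an object. The `apply_patch` helper is hypothetical and written only for illustration: it handles just "add" and "replace" on nested dictionaries, with no arrays and no JSON Pointer escaping. The API server applies the real patch for you.

```python
import copy


def apply_patch(document: dict, operations: list[dict]) -> dict:
    """Apply 'add' and 'replace' operations to nested dicts (illustration only)."""
    # Work on a copy so that a failing operation leaves the input untouched.
    result = copy.deepcopy(document)
    for op in operations:
        if op["op"] not in ("add", "replace"):
            raise ValueError(f"unsupported op {op['op']!r}")
        *parents, last = op["path"].lstrip("/").split("/")
        target = result
        for key in parents:
            target = target[key]  # KeyError here aborts the whole patch
        if op["op"] == "replace" and last not in target:
            raise KeyError(f"cannot replace missing member {op['path']}")
        # 'add' on an existing object member overwrites its value.
        target[last] = op["value"]
    return result


deployment = {"spec": {"replicas": 1}}
patched = apply_patch(deployment, [{"op": "add", "path": "/spec/replicas", "value": 3}])
print(patched)  # {'spec': {'replicas': 3}}
```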

Admission response

Compared to the validating webhook from the previous post, the admission response now contains two more fields: patchType and patch. patchType is set to JSONPatch, and patch contains the list of patch operations.

One more hoop needs to be jumped through, and that is the patch value in the response must be base64 encoded. Great.

You can take a look at the following AdmissionReview example:

    {
      "apiVersion": "admission.k8s.io/v1",
      "kind": "AdmissionReview",
      "response": {
        "uid": "<value from request.uid>",
        "allowed": true,
        "patchType": "JSONPatch",
        "patch": "W3sib3AiOiAiYWRkIiwgInBhdGgiOiAiL3NwZWMvcmVwbGljYXMiLCAidmFsdWUiOiAzfV0="
      }
    }

You can read more about the response here.
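The base64 step is easy to check by hand. The short sketch below, using only the standard library, encodes the replicas patch from the JSON Patch section and decodes it again, which can be handy when debugging a response. The variable names are illustrative.

```python
import base64
import json

operations = [{"op": "add", "path": "/spec/replicas", "value": 3}]

# Serialize to JSON, then base64-encode; the response field needs a str, not bytes.
encoded = base64.b64encode(json.dumps(operations).encode()).decode()
print(encoded)

# Decoding reverses the two steps.
decoded = json.loads(base64.b64decode(encoded))
print(decoded == operations)
```

With Python's default JSON separators, the encoded string is the same value as the `patch` field in the AdmissionReview example.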

Python Code

The code, finally…

The code is divided into three blocks: the POST endpoint, a helper function that builds the response, and one more function that takes care of the patching.

Of course, some basic things are added like logging and checks. Nothing too fancy. I kept the code as minimal and simple as possible. Feel free to (re)use the code and add more functionality to it.

Starting from the endpoint, you need to grab a couple of values from the incoming request. These are the request UID that needs to be sent back with the response, and the nodeSelector data in case there is an existing one.

    @app.post("/mutate")
    def mutate_request(request: dict = Body(...)):
        # The UID must be echoed back in the response.
        uid = request["request"]["uid"]
        # Pod template spec, where an existing nodeSelector would live.
        selector = request["request"]["object"]["spec"]["template"]["spec"]
        object_in = request["request"]["object"]

        webhook.info(f'Applying nodeSelector for {object_in["kind"]}/{object_in["metadata"]["name"]}.')

        return admission_review(
            uid,
            "Successfully added nodeSelector.",
            "nodeSelector" in selector,
        )
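To see which parts of the payload the endpoint actually touches, here is a small standalone sketch that pulls the same values out of a trimmed AdmissionReview request. The `extract_fields` helper and the sample payload (including its UID) are made up for illustration; a real request carries many more fields.

```python
def extract_fields(review: dict) -> tuple[str, str, str, bool]:
    """Return (uid, kind, name, has_node_selector) from an AdmissionReview request."""
    request = review["request"]
    obj = request["object"]
    pod_spec = obj["spec"]["template"]["spec"]
    return request["uid"], obj["kind"], obj["metadata"]["name"], "nodeSelector" in pod_spec


# Trimmed, hypothetical payload with only the fields read above.
sample_review = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "hypothetical-uid-1234",
        "object": {
            "kind": "Deployment",
            "metadata": {"name": "nginx-deployment"},
            "spec": {"template": {"spec": {"containers": []}}},
        },
    },
}

print(extract_fields(sample_review))
```

Note that the `spec.template.spec` lookup only works for objects that embed a pod template, such as Deployments, StatefulSets, and DaemonSets.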

The patch function requires two arguments: node_pool, which will be set as the nodeSelector, and existing_selector, a boolean that indicates whether an existing label is present and needs to be replaced by the new one.

    def patch(node_pool: str, existing_selector: bool) -> bytes:
        # node_pool looks like "label: value"; strip spaces and split it in two.
        label, value = node_pool.replace(" ", "").split(":")
        webhook.info(f"Got '{node_pool}' as nodeSelector label, patching...")

        if existing_selector:
            webhook.info("Found already existing node selector, replacing it.")
            patch_operations = [Patch(op="replace", value={label: value}).dict()]
        else:
            patch_operations = [Patch(op="add", value={label: value}).dict()]
        # b64encode returns bytes; the caller decodes them to a string.
        return base64.b64encode(json.dumps(patch_operations).encode())

In short, you get the node pool key-value pair, split it, and assign it to two separate variables.

You do a boolean check to see whether the nodeSelector field already exists. If it does, you do a replace operation with the given value instead of an add.

To do this more cleanly I created a patch model and defined the fields and the default path.

Finally, do a json.dumps() to properly format the array, encode it in base64, and return it.
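The patch model itself lives in models.py and isn't shown here, so the following is only a stand-in sketch that uses a standard-library dataclass in its place. The default path `/spec/template/spec/nodeSelector` is an assumption inferred from where the endpoint looks for the field, and `build_patch` is a hypothetical name.

```python
import base64
import json
from dataclasses import asdict, dataclass

# Assumed default path, inferred from where the endpoint reads nodeSelector.
DEFAULT_PATH = "/spec/template/spec/nodeSelector"


@dataclass
class Patch:
    """Stand-in for the article's patch model: one JSON Patch operation."""
    op: str
    value: dict
    path: str = DEFAULT_PATH


def build_patch(label: str, value: str, existing_selector: bool) -> bytes:
    """Build a one-operation patch and return it base64-encoded."""
    op = "replace" if existing_selector else "add"
    operations = [asdict(Patch(op=op, value={label: value}))]
    return base64.b64encode(json.dumps(operations).encode())


encoded = build_patch("kubernetes.io/hostname", "docker-desktop", existing_selector=False)
print(json.loads(base64.b64decode(encoded)))
```

Because the operation targets the whole nodeSelector map rather than a single key inside it, a label key containing `/` does not need JSON Pointer escaping in the path.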

    def admission_review(uid: str, message: str, existing_selector: bool) -> dict:
        return {
            "apiVersion": "admission.k8s.io/v1",
            "kind": "AdmissionReview",
            "response": {
                "uid": uid,
                "allowed": True,
                "patchType": "JSONPatch",
                "status": {"message": message},
                "patch": patch(pool, existing_selector).decode(),
            },
        }

In the admission response, pass the UID of the original request, an optional status/message, and at the very end call the patch function to generate the patch data.

The extra node selector field check is to make this clearer and log the actual label replacement. It can also be completely omitted and done silently.

Docker image

Nothing new in the Dockerfile either:

    FROM python:3.10-slim-buster

    WORKDIR /webhook

    COPY requirements.txt /webhook
    COPY main.py /webhook
    COPY models.py /webhook

    RUN pip install --no-cache-dir --upgrade -r /webhook/requirements.txt

    CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "5000", "--ssl-keyfile=/certs/webhook.key", "--ssl-certfile=/certs/webhook.crt"]

Copy all the local files, and install the requirements.

However, in the last line, you’ll notice the two required SSL arguments. A certificate and key will need to be supplied to the uvicorn web server.

Generating SSL certificates

As I explained in the previous blog post, this is one of the most important things for the webhook to work.

Those certificates need to be set on the web server as well as with the MutatingWebhookConfiguration object.

Previously I generated the certs using openssl but this time laziness got the better of me and I used this website to take care of that.

The most important thing is to add the DNS entry that will match the webhook service DNS: {service-name}.{namespace}.svc.

In my case that was mutating-webhook.default.svc.

Writing the other certificate info is up to you.

Download the certificate in PEM format and the key. Convert them to base64, either online or on Linux using the built-in base64 command.

    cat webhook.crt | base64 | tr -d '\n'
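If you would rather stay in Python, the same single-line encoding can be sketched with the standard library. The helper names below are hypothetical and the demo uses inline bytes, so it runs without a certificate on disk.

```python
import base64
from pathlib import Path


def to_single_line_base64(data: bytes) -> str:
    """Base64-encode bytes; b64encode does not insert line breaks."""
    return base64.b64encode(data).decode("ascii")


def encode_file(path: str) -> str:
    """Read a file (for example webhook.crt) and return its base64 text."""
    return to_single_line_base64(Path(path).read_bytes())


# Demo with inline bytes instead of a real certificate file.
print(to_single_line_base64(b"-----BEGIN CERTIFICATE-----\n"))
```

Calling `encode_file("webhook.crt")` and `encode_file("webhook.key")` would then give the two values for the Secret.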

You will need to add the certificate and key to a Secret, which then needs to be mounted on the webhook container. Don’t forget to add the (again) base64 encoded cert to the MutatingWebhookConfiguration.

Kubernetes files

To fully deploy the webhook on a cluster you need a:

- Deployment

- Service

- Secret

- MutatingWebhookConfiguration

All the example files are available here.

To recap the certificate setup:

In the secret you need to add the certificate and key:

    apiVersion: v1
    kind: Secret
    metadata:
      name: admission-tls
    type: Opaque
    data:
      webhook.crt: <YOUR BASE64 ENCODED CERT>
      webhook.key: <YOUR BASE64 ENCODED KEY>

In the webhook configuration:

    [other data truncated]
    clientConfig:
      caBundle: <YOUR BASE64 ENCODED CERT>

In the deployment you mount the secret and set the path:

    spec:
      containers:
        [other data truncated]
          volumeMounts:
            - name: certs-volume
              readOnly: true
              mountPath: "/certs"
      volumes:
        - name: certs-volume
          secret:
            secretName: admission-tls

Finally, the files need to match the uvicorn config:

    CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "5000", "--ssl-keyfile=/certs/webhook.key", "--ssl-certfile=/certs/webhook.crt"]

With the above setup, you can build the image and deploy all the files.

What’s left would be to do a test run and check if everything works correctly.

Testing

I prepared a YAML file that creates a Deployment, a StatefulSet, and a DaemonSet, all without a node selector label, plus one more Deployment that has an existing node selector label.

    kubectl apply -f tests.yaml
    deployment.apps/nginx-deployment created
    statefulset.apps/nginx-sts created
    daemonset.apps/nginx-ds created
    deployment.apps/nginx-deployment-with-label created

Let’s check the webhook logs:

    [2022-11-06 21:50:08,723] INFO: Applying nodeSelector for Deployment/nginx-deployment.
    [2022-11-06 21:50:08,723] INFO: Got 'kubernetes.io/hostname: docker-desktop' as nodeSelector label, patching pods...
    INFO: 192.168.65.3:33852 - "POST /mutate?timeout=20s HTTP/1.1" 200 OK
    [2022-11-06 21:50:08,785] INFO: Applying nodeSelector for StatefulSet/nginx-sts.
    [2022-11-06 21:50:08,786] INFO: Got 'kubernetes.io/hostname: docker-desktop' as nodeSelector label, patching pods...
    INFO: 192.168.65.3:33852 - "POST /mutate?timeout=20s HTTP/1.1" 200 OK
    [2022-11-06 21:50:08,839] INFO: Applying nodeSelector for DaemonSet/nginx-ds.
    [2022-11-06 21:50:08,840] INFO: Got 'kubernetes.io/hostname: docker-desktop' as nodeSelector label, patching pods...
    INFO: 192.168.65.3:33852 - "POST /mutate?timeout=20s HTTP/1.1" 200 OK
    [2022-11-06 21:50:08,888] INFO: Applying nodeSelector for Deployment/nginx-deployment-with-label.
    [2022-11-06 21:50:08,888] INFO: Got 'kubernetes.io/hostname: docker-desktop' as nodeSelector label, patching pods...
    [2022-11-06 21:50:08,888] INFO: Found already existing node selector, replacing it.
    INFO: 192.168.65.3:33852 - "POST /mutate?timeout=20s HTTP/1.1" 200 OK

Notice the last patch log. Since a selector was already present, it was replaced rather than added, and an info log records the operation.

To double-check if the actual objects were mutated:

    kubectl get deployment,sts,daemonset -o custom-columns='NAME:.metadata.name, SELECTOR:.spec.template.spec.nodeSelector'
    NAME                          SELECTOR
    mutating-webhook              <none>
    nginx-deployment              map[kubernetes.io/hostname:docker-desktop]
    nginx-deployment-with-label   map[kubernetes.io/hostname:docker-desktop]
    nginx-sts                     map[kubernetes.io/hostname:docker-desktop]
    nginx-ds                      map[kubernetes.io/hostname:docker-desktop]

Success! Hope you enjoyed the read :).